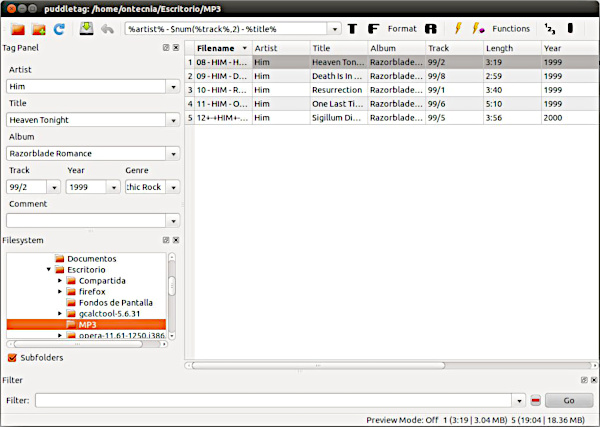

I had a file of about a thousand lines containing the full description of multiple products (audio plugins from Plugin Alliance in my case). The file starts like this:

249

19.99

Mixland

Vac Attack★★★★★

A warm harmonically-rich optical limiter for compression with tube saturation that’s great on vocals, drums, basses, and your stereo bus.

Hardware EmulationsHidden GemsPA MembersSale

89.99

29.99

Woodlands Studio

VOXILLION★★★★★

A stunning and sophisticated, and complete vocal chain in one streamlined workflow. Featuring a high-end blend of a tube-driven preamp, two types of compressors, Nasal Dynamic EQs, Harmonics and more

Hidden GemsLimited Time OnlyPA_EXTSale

279

39.99

ADPTR AUDIO

Metric AB★★★★★

The mastering engineer’s best friend: Compare your mix to your favorite reference tracks. See,hear and learn even more with new expanded features.

Hidden GemsMasteringSale

and ends like this:

FreeDownload

PA FREE

bx_shredspread★★★★★

Intelligent M/S width for doubled riff guitars. Auto-avoid common phase problems, and sound extra-wide and tight!

FREEGuitar & BassHidden GemsM/S InsideMade by BX

FreeDownload

PA FREE

bx_tuner★★★★☆

Accurate tuning for guitar & bass with useful features like volume dimming. Tune up right before you hit the record button.

FREEGuitar & BassMade by BXSale

FreeDownload

PA FREE

bx_yellowdrive★★★★★

Warm to crunch to shred with this classic “Yellow” pedal in plugin form.

Creative FXFREEGuitar & BassHardware EmulationsMade by BXSale

What I wanted to do was split this long file of about a thousand lines into smaller files, each containing only the lines for a single plugin.

Fortunately, the plugins were separated by an empty line. After some searching, I stumbled upon a Stack Overflow post from someone with the same issue, and I settled on this solution, enhanced by this comment:

gawk -v RS= '{ print > ("plugin-" NR ".txt")}' plugins.txt

As explained in the solution, “setting RS to null tells (g)awk to use one or more blank lines as the record separator. Then one can simply use NR to set the name of the file corresponding to each new record”. As explained in the comment, this fails with the basic version of awk, which cannot keep that many files open at the same time (only 252 files were created with awk). Switching to gawk works great, and now I have 277 files that I can process further.
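If gawk is not available, a common workaround for the open-file limit is to close each output file right after writing it, so that only one file is open at any time. Here is a minimal sketch of that idea. The function name `split_records` and its optional prefix argument are my own, for illustration only, and I have not tried it on the original plugins file. `close()` is a standard awk function, so this should also work with the basic awk.

```shell
# Split a file whose records are separated by blank lines
# into one output file per record.
# split_records and its PREFIX argument are hypothetical names for this sketch.
# Usage: split_records INPUT [PREFIX]
split_records() {
    input=$1
    prefix=${2:-plugin-}    # default matches the file names used above
    awk -v RS= -v prefix="$prefix" '{
        out = prefix NR ".txt"   # one file name per record number
        print > out              # write the whole record
        close(out)               # release the file before the next record
    }' "$input"
}
```

With this function defined, running `split_records plugins.txt` should write `plugin-1.txt`, `plugin-2.txt`, and so on to the current directory, just like the gawk one-liner.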